Why this blog?

Automation is no longer a luxury; it’s a necessity. If your enterprise is seeking a unified, modern approach to workflow automation, you’re in the right place. This blog breaks down how GitHub Actions can streamline data engineering operations and bring scalable, CI/CD-driven efficiency to your data pipelines.

Whether you’re a data engineer, analyst, or business leader, this blog will help you understand how GitHub Actions enables faster delivery, cleaner workflows and better collaboration.

What if your ETL jobs, data validations and deployments could run themselves, straight from your GitHub repo? Many enterprises still rely on cron jobs, manual scripts and a patchwork of separate tools to manage their workflows. This blog explores how GitHub Actions, GitHub’s native CI/CD solution, offers a modern, unified way to automate your ETL pipelines. Whether you’re managing data transformations, running validations or looking for smooth deployments into production, GitHub Actions brings consistent, developer-friendly automation to your toolkit.

What is GitHub Actions?

GitHub Actions is a CI/CD tool integrated directly into GitHub. It lets you automate workflows that are triggered by events in your GitHub repository, such as code pushes, pull requests and tag releases. You define these workflows in YAML configuration files that specify what should happen and when.
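To make this concrete, here is a minimal sketch of a workflow file. The file name, workflow name and job name are illustrative assumptions; the file would typically live under `.github/workflows/` in your repository.

```yaml
# .github/workflows/ci.yml (illustrative file name)
name: example-ci

# "When": run on pushes to main and on every pull request
on:
  push:
    branches: [main]
  pull_request:

# "What": one job with two steps on a GitHub-hosted runner
jobs:
  hello:
    runs-on: ubuntu-latest
    steps:
      # Check out the repository so later steps can see the code
      - uses: actions/checkout@v4
      - name: Show what triggered the run
        run: echo "Triggered by ${{ github.event_name }} on ${{ github.ref }}"
```

The `on` block covers the "when" and the `jobs` block covers the "what"; everything else in this post builds on that same structure.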

Why Should Engineers Care?

Traditionally, data engineers have relied on tools like Airflow, Automic or cron for orchestration. That leaves developers responsible for integrating their code with several different tools and for maintaining each of them. This is where GitHub Actions comes into the picture.

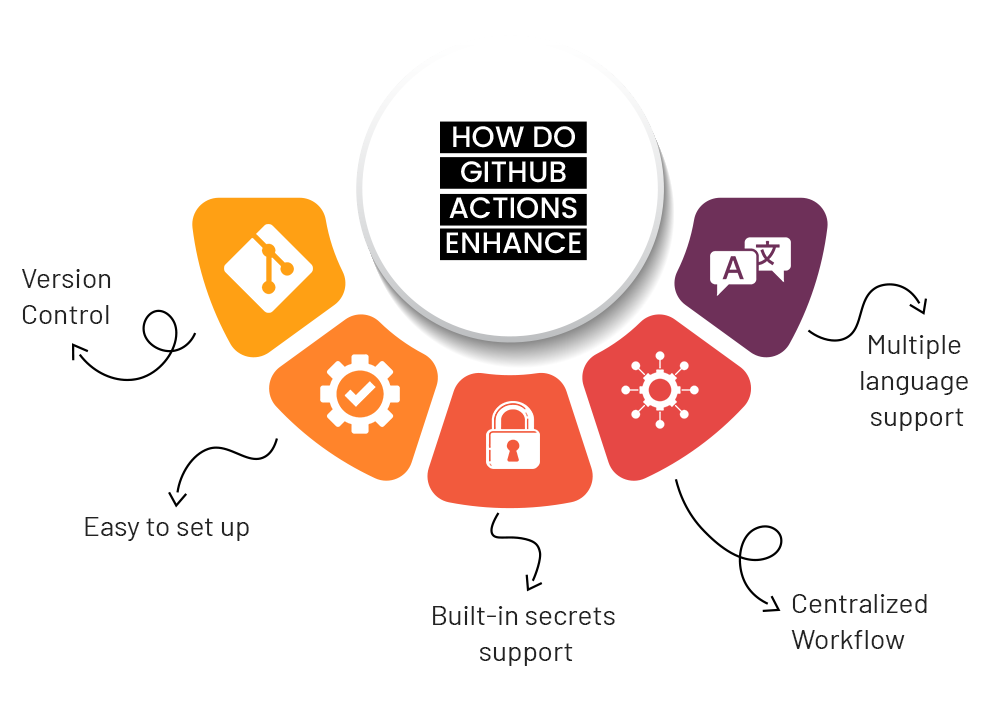

- Version Control: Because orchestration happens inside GitHub itself, workflow definitions are versioned alongside your code, so history is easy to maintain.

- Easy to set up: Create a YAML configuration file to set up the workflow.

- Centralized Workflow: Keep ingestion, data validation and code tests in a single workflow.

- Built-in secrets support: Store private keys and other credentials as encrypted GitHub secrets instead of keeping them in code.

- Multiple language support: Write your steps in Python, JavaScript, shell scripts or other languages, or run them inside Docker containers.
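The points above can be sketched in a single workflow that replaces a nightly cron job. This is only an illustration: the script paths, the `SOURCE_API_KEY` secret name and the `requirements.txt` file (assumed to include `pytest`) are hypothetical and would need to match your own repository.

```yaml
name: nightly-ingestion

on:
  schedule:
    - cron: "0 2 * * *"   # 02:00 UTC every day
  workflow_dispatch:       # also allow manual runs from the GitHub UI

jobs:
  ingest-and-validate:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install dependencies
        run: pip install -r requirements.txt
      # Ingestion, validation and tests live in one workflow
      - name: Ingest data (hypothetical script)
        run: python scripts/ingest.py
        env:
          # Read from the repository's encrypted secrets, never from code
          SOURCE_API_KEY: ${{ secrets.SOURCE_API_KEY }}
      - name: Validate data (hypothetical script)
        run: python scripts/validate.py
      - name: Run code tests
        run: pytest
```

If any step fails, the remaining steps are skipped and the run is marked as failed, which gives you a basic safety net that a bare cron entry does not.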

Snowflake – Object Deployment:

Teams often manage Snowflake schemas, tables, views and user permissions manually, which can lead to drift between environments and a loss of version control. Deployments need continuous monitoring and must stay in sync across environments, and doing this by hand is error-prone. GitHub Actions helps automate the process, running the same deployment workflow against every environment so that releases stay consistent and the risk of mistakes is reduced.

Additionally, you can use GitHub Actions to trigger and automate the ingestion of data from various sources directly into Snowflake using Python and SQL.
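As a rough sketch of what such a deployment could look like, the workflow below runs whenever SQL object definitions change on the main branch. The `snowflake/` folder, the `scripts/deploy_objects.py` script and the secret names are all assumptions made for illustration; the script itself (which would connect with `snowflake-connector-python` and execute the SQL files) is not shown.

```yaml
name: snowflake-deploy

on:
  push:
    branches: [main]
    paths:
      - "snowflake/**"   # only run when object definitions change

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install the Snowflake connector
        run: pip install snowflake-connector-python
      - name: Apply object definitions (hypothetical script)
        run: python scripts/deploy_objects.py --dir snowflake/
        env:
          # Hypothetical secret names; the script is assumed to read them
          SNOWFLAKE_ACCOUNT: ${{ secrets.SNOWFLAKE_ACCOUNT }}
          SNOWFLAKE_USER: ${{ secrets.SNOWFLAKE_USER }}
          SNOWFLAKE_PASSWORD: ${{ secrets.SNOWFLAKE_PASSWORD }}
```

Because every change to a schema, table, view or grant goes through a commit, the repository history doubles as the deployment history.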

dbt – Transformation and Testing:

Transformation logic often runs in an isolated environment without version control. With GitHub Actions, you can automatically execute dbt run and dbt test whenever new code is pushed to GitHub, keeping your codebase and your database continuously integrated. You can also generate and publish dbt documentation as part of the workflow, so the transformation logic stays documented and accessible to all stakeholders. The same workflow can run data quality checks and surface their results, which helps maintain data integrity throughout the development lifecycle.
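A minimal sketch of this setup is shown below, assuming a dbt project at the repository root that targets Snowflake. The `./ci` profiles directory and the secret names are assumptions; the `profiles.yml` in that directory is expected to read credentials through dbt’s `env_var()` function.

```yaml
name: dbt-ci

on:
  push:
    branches: [main]
  pull_request:

jobs:
  dbt:
    runs-on: ubuntu-latest
    env:
      # Tell dbt where to find profiles.yml (assumed location)
      DBT_PROFILES_DIR: ./ci
      # Hypothetical secret names, referenced from profiles.yml via env_var()
      SNOWFLAKE_ACCOUNT: ${{ secrets.SNOWFLAKE_ACCOUNT }}
      SNOWFLAKE_USER: ${{ secrets.SNOWFLAKE_USER }}
      SNOWFLAKE_PASSWORD: ${{ secrets.SNOWFLAKE_PASSWORD }}
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install dbt with the Snowflake adapter
        run: pip install dbt-snowflake
      - name: Build models
        run: dbt run
      - name: Run tests and data quality checks
        run: dbt test
      - name: Generate documentation
        run: dbt docs generate
      # Keep the generated docs site as a downloadable build artifact
      - uses: actions/upload-artifact@v4
        with:
          name: dbt-docs
          path: target/
```

Here the documentation is only stored as an artifact; publishing it to a hosting target is a separate choice that depends on your environment.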

AWS + Terraform – Infrastructure Deployment:

Modifying AWS resources like S3, Lambda or DMS can be complex, and manual changes made with an admin role are often difficult to track and reproduce. Terraform is an infrastructure-as-code tool that helps manage and maintain the configuration of AWS services. You can use GitHub Actions to automatically apply the infrastructure changes defined in your Terraform files when a pull request is merged. This keeps your infrastructure version-controlled and auditable.
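One possible shape for this, sketched under a few assumptions: the Terraform files live in a hypothetical `infra/` folder, state is kept in a remote backend that is already configured, and AWS credentials are stored as repository secrets with the names shown. Pull requests only produce a plan, while the push event created by merging into main applies it.

```yaml
name: terraform

on:
  pull_request:
    paths: ["infra/**"]
  push:
    branches: [main]
    paths: ["infra/**"]

jobs:
  terraform:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: infra   # assumed location of the .tf files
    steps:
      - uses: actions/checkout@v4
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1    # illustrative region
      - uses: hashicorp/setup-terraform@v3
      - name: Initialize
        run: terraform init -input=false
      # On pull requests: show what would change, but change nothing
      - name: Plan
        if: github.event_name == 'pull_request'
        run: terraform plan -input=false
      # After merge to main: apply the reviewed changes
      - name: Apply
        if: github.event_name == 'push'
        run: terraform apply -input=false -auto-approve
```

Splitting plan and apply this way means reviewers see the proposed changes in the pull request run before anything touches the AWS account.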

AI Integration with GitHub Actions

With the growing adoption of AI across enterprises, GitHub Actions can play a key role in automating AI workflows. You can use Actions to trigger model training, deploy inference APIs or even generate documentation using LLMs, all as part of your CI/CD pipelines. This lets you orchestrate AI models in code alongside your data workflows, so stakeholders get richer data and more insight from the same pipeline.
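As a small illustration of triggering model training, the sketch below defines a manually started workflow with one input. The `ml/train.py` script, its `--epochs` and `--output` flags and the `requirements.txt` file are hypothetical; a real training job may also need a larger or self-hosted runner than the default one used here.

```yaml
name: train-model

on:
  workflow_dispatch:
    inputs:
      epochs:
        description: "Number of training epochs"
        required: false
        default: "5"

jobs:
  train:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install dependencies
        run: pip install -r requirements.txt
      - name: Train (hypothetical script and flags)
        run: python ml/train.py --epochs "${{ inputs.epochs }}" --output model/
      # Keep the trained model as a build artifact for later deployment
      - uses: actions/upload-artifact@v4
        with:
          name: trained-model
          path: model/
```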

Final Thoughts

As data platforms become more complex and interconnected, the need for scalable, automated workflows has become critical. GitHub Actions offers a powerful and flexible way for data engineers and platform teams to integrate infrastructure, transformations, data quality and even AI model training into a single, unified pipeline.

Whether you’re managing Snowflake deployments, transforming and testing data with dbt, provisioning infrastructure as code with Terraform or training ML models on AWS, GitHub Actions empowers your team to move faster, reduce manual errors and bring DevOps best practices to your data pipelines.

Are you looking to upgrade your data pipeline deployments? Talk to our experts!