Artificial intelligence (AI) is transforming how organizations manage and govern data. As AI permeates industries, traditional data governance is giving way to a framework that unifies data and AI governance. This evolution is critical to harnessing AI’s potential while managing its risks and complexities.

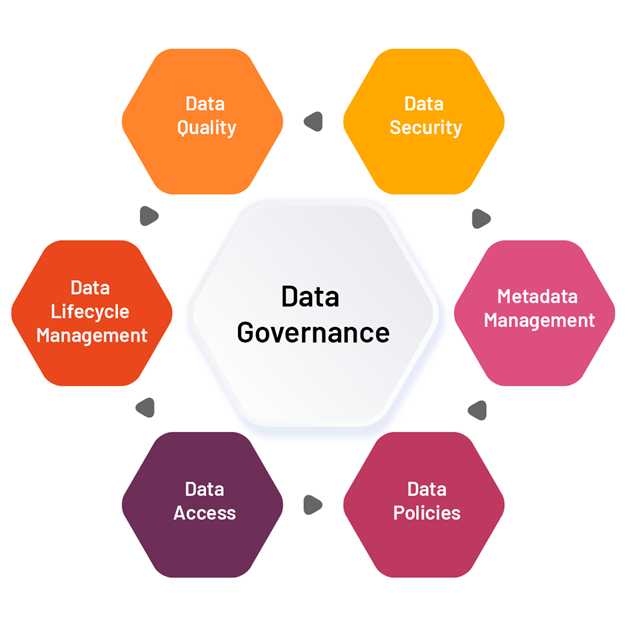

The Pillars of Traditional Data Governance

Data governance provides the overarching management framework to ensure data availability, usability, consistency, security and alignment with policies across the enterprise data ecosystem.

It revolves around six key pillars:

- Data Quality: Ensuring accuracy, completeness, reliability and validity of data for business usage through validation, monitoring and remediation processes.

- Data Security: Safeguarding data from unauthorized access and breaches through controls like encryption and cybersecurity measures.

- Metadata Management: Cataloging and documenting data context, meaning, structure, interrelationships and lineage across systems.

- Data Policies: Establishing policies and standards aligned to regulations for data acquisition, storage, retention, usage, privacy and lifecycle management.

- Data Access: Provisioning authenticated, policy-based access to enterprise data by various personas across lines of business.

- Data Lifecycle Management: Managing data from inception through usage until retirement across the data supply chain.

While this traditional governance model serves structured data scenarios reasonably well, AI introduces new challenges.

The AI-Induced Disruptions

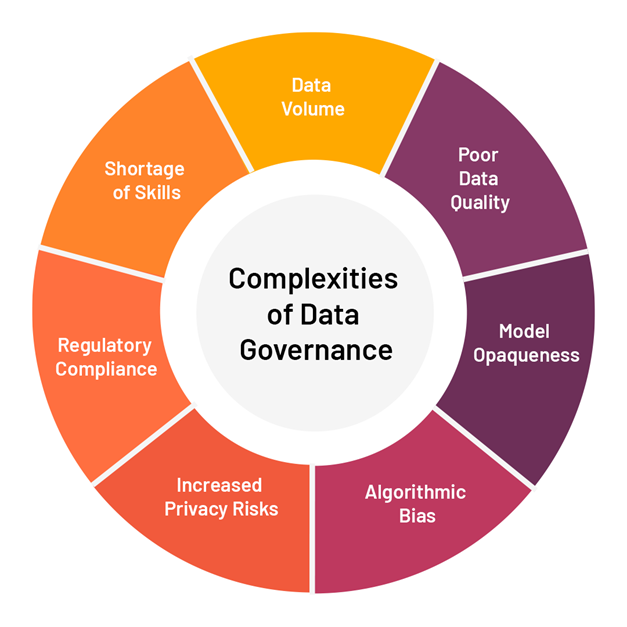

AI systems are only as effective as the data they are trained on. But governing AI data poses several complexities:

- Data Volume: AI training data can comprise billions of points from diverse sources, challenging traditional management methods.

- Poor Data Quality: Low-quality, biased, or inconsistent training data significantly impacts the performance and fairness of AI models. Ensuring consistently high data quality is far harder at AI data volumes.

- Model Opacity: The inner workings of complex AI models are often black boxes, which makes individual decisions hard to explain, audit and govern.

- Algorithmic Bias: Training data containing human biases can lead AI models to make prejudiced and unethical decisions. Continuously detecting and managing bias is crucial.

- Increased Privacy Risks: The depth of insights uncovered by AI from data patterns heightens privacy concerns. Data anonymization alone provides only limited protection against re-identification.

- Regulatory Compliance: Increased use of consumer data by AI applications brings stricter compliance obligations under regulations such as GDPR and CCPA.

- Shortage of Skills: Governing AI requires a blend of data governance and data science skills, which are scarce, hampering oversight of AI systems.
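
To make the re-identification point concrete, here is a minimal sketch of a k-anonymity check: it counts how many records share each combination of quasi-identifiers and flags combinations that are rare enough to single someone out. The field names, the sample records and the choice of k are all assumptions made for illustration, not part of any specific tool.

```python
from collections import Counter

def group_sizes(records, quasi_identifiers):
    """Count records per unique combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)

def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group (0 for an empty dataset)."""
    return min(group_sizes(records, quasi_identifiers).values(), default=0)

def risky_groups(records, quasi_identifiers, k):
    """Return the combinations shared by fewer than k records."""
    sizes = group_sizes(records, quasi_identifiers)
    return {combo: n for combo, n in sizes.items() if n < k}

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "94110", "birth_year": 1985, "gender": "F", "diagnosis": "A"},
    {"zip": "94110", "birth_year": 1985, "gender": "F", "diagnosis": "B"},
    {"zip": "94110", "birth_year": 1990, "gender": "M", "diagnosis": "A"},
    {"zip": "94117", "birth_year": 1972, "gender": "M", "diagnosis": "C"},
]
quasi = ["zip", "birth_year", "gender"]

print("k =", k_anonymity(records, quasi))
print("groups below k=2:", risky_groups(records, quasi, k=2))
```

Any group with a single record can potentially be linked back to a person by joining on those fields with an outside dataset, which is why removing names is not sufficient on its own.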

The Evolution to Unified Data and AI Governance

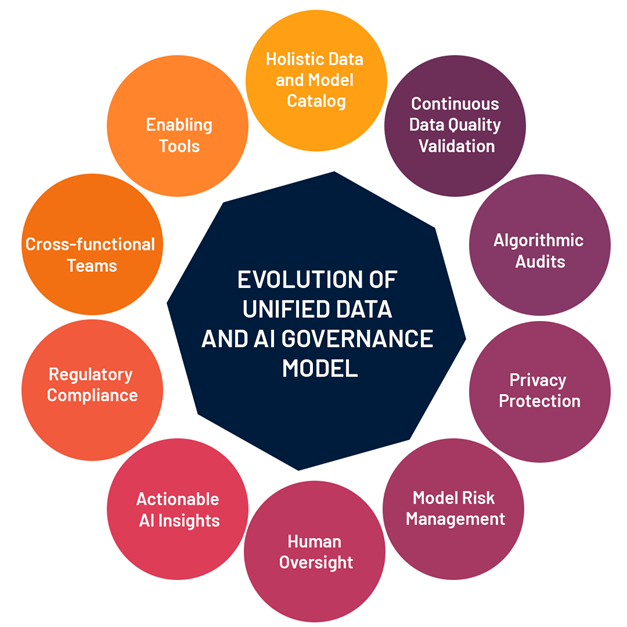

Governing AI necessitates extending traditional data governance to encompass AI’s unique risks and requirements.

Key aspects of this Unified Data and AI Governance model include:

- Holistic Data and Model Catalog: A comprehensive catalog of all data and AI model metadata providing visibility into relationships, lineage and meaning to enhance traceability.

- Continuous Data Quality Validation: Multilayered data quality checks, such as statistical analysis and rules-based profiling, to keep training data and model inputs consistent.

- Algorithmic Audits: Proactive bias assessment by testing model outcomes across diverse datasets and user groups.

- Privacy Protection: Deploying data minimization, anonymization, federated learning and encryption to mitigate privacy risks.

- Model Risk Management: Formal evaluation of risks across the AI model lifecycle, starting before deployment, to confirm that required controls are in place.

- Human Oversight: Maintaining meaningful human oversight of data and models across the lifecycle.

- Actionable AI Insights: Providing visibility into key metrics on model accuracy, data quality, bias rate and AI vs. human decision ratios.

- Regulatory Compliance: Embedding compliance with data protection and AI regulations within data sourcing, model development and operations.

- Cross-functional Teams: Developing blended teams encompassing data engineers, scientists, and governance experts.

- Enabling Tools: Deploying integrated tools spanning metadata, data quality, bias detection and model risk management.
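
As one illustration of what a combined data and model catalog makes possible, the sketch below stores lineage as a simple graph and walks it to find which models are affected when an upstream dataset changes. The asset names and the `model.` naming prefix are hypothetical; a real catalog would expose lineage through its own interface.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets built directly from it.
lineage = {
    "raw.customers": ["features.customer_profile"],
    "raw.transactions": ["features.spend_summary"],
    "features.customer_profile": ["model.churn_v2"],
    "features.spend_summary": ["model.churn_v2", "model.fraud_v1"],
}

def downstream_assets(graph, start):
    """Breadth-first walk returning every asset reachable from `start`."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

def impacted_models(graph, start):
    """Filter downstream assets to models (assumes a 'model.' name prefix)."""
    return sorted(a for a in downstream_assets(graph, start) if a.startswith("model."))

print(impacted_models(lineage, "raw.transactions"))
```

The same traversal run in the opposite direction (from a model back to its sources) is what supports traceability when a model decision needs to be explained.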

Implementing a unified approach enables continuous assessment and improvement across the AI data and model lifecycle while delivering the transparency, explainability and risk mitigation imperative to scale AI responsibly.
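
An algorithmic audit of the kind listed above can start very simply. The following sketch compares the rate of favorable model decisions across user groups and computes the ratio between the lowest and highest rate. The group labels and decisions are made-up sample data, and the 0.8 review threshold is an assumption borrowed from the commonly cited four-fifths rule of thumb; the appropriate metric and threshold depend on the use case and applicable policy.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """outcomes: iterable of (group, decision) pairs, decision is 1 or 0."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest

# Hypothetical audit sample: 10 decisions per group.
outcomes = (
    [("group_a", 1)] * 8 + [("group_a", 0)] * 2
    + [("group_b", 1)] * 5 + [("group_b", 0)] * 5
)

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumption: set by governance policy

print(rates, round(ratio, 3))
if ratio < REVIEW_THRESHOLD:
    print("Flag for bias review")
```

A single ratio does not prove or rule out unfair bias; it is a trigger for the human review described in the oversight item above.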

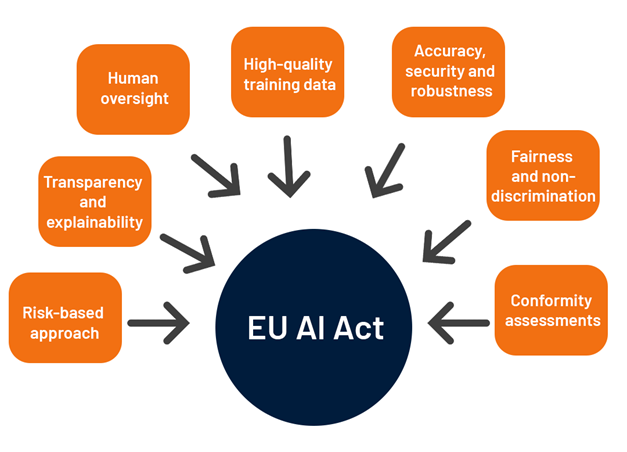

Considerations from the EU AI Act

The proposed EU AI Act aims to create a comprehensive framework to govern the development and use of trustworthy AI within the EU. It is among the most ambitious and comprehensive attempts worldwide to regulate AI.

Some key requirements include:

- Risk-based approach: Stringent governance for high-risk AI systems, like those used in critical infrastructure or employment decisions, and lighter oversight for minimal-risk AI systems.

- Transparency and explainability: Requirements for high-risk AI systems to be transparent and explain their functionality and decisions to users.

- Human oversight: Appropriate human oversight and controls must be enabled for high-risk AI systems to prevent unacceptable harm.

- High-quality training data: Relevant, representative, error-free and complete training data must be used. Ongoing data governance is imperative.

- Accuracy, security and robustness: Achieving benchmark levels of accuracy, security and robustness proportionate to the risks for high-risk AI systems.

- Fairness and non-discrimination: Proactively testing for and avoiding unfair bias in training data or decisions.

- Conformity assessments: Mandatory conformity assessments for high-risk AI systems before deployment. Continuous risk monitoring is also mandated.

By proactively embedding considerations like these into their unified governance model, organizations can ensure compliance with impending regulations like the EU AI Act while scaling AI responsibly.
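
One way to embed a risk-based approach into day-to-day governance is a simple registry that records each AI system's risk tier and checks which controls are still outstanding before deployment. The sketch below is illustrative only: the control labels are hypothetical names derived from the requirements listed above, the tier is assumed to be assigned by a human reviewer, and none of this is a legal interpretation of the Act.

```python
from dataclasses import dataclass, field

# Hypothetical control labels per risk tier (not official terminology).
REQUIRED_CONTROLS = {
    "high": {
        "transparency_documentation",
        "human_oversight",
        "training_data_review",
        "accuracy_robustness_testing",
        "bias_testing",
        "conformity_assessment",
        "continuous_risk_monitoring",
    },
    "minimal": set(),
}

@dataclass
class AISystem:
    name: str
    risk_tier: str  # assigned by a reviewer, not inferred by this code
    completed_controls: set = field(default_factory=set)

def missing_controls(system):
    """Return the controls still outstanding for the system's tier."""
    if system.risk_tier not in REQUIRED_CONTROLS:
        raise ValueError(f"Unknown risk tier: {system.risk_tier}")
    return sorted(REQUIRED_CONTROLS[system.risk_tier] - system.completed_controls)

def ready_for_deployment(system):
    return not missing_controls(system)

resume_screener = AISystem(
    "resume_screener", "high", {"human_oversight", "bias_testing"}
)
spam_filter = AISystem("spam_filter", "minimal")

print(resume_screener.name, "missing:", missing_controls(resume_screener))
print(spam_filter.name, "ready:", ready_for_deployment(spam_filter))
```

Keeping the tier-to-controls mapping in one place means that when regulatory requirements change, the update happens in the registry rather than in each project.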

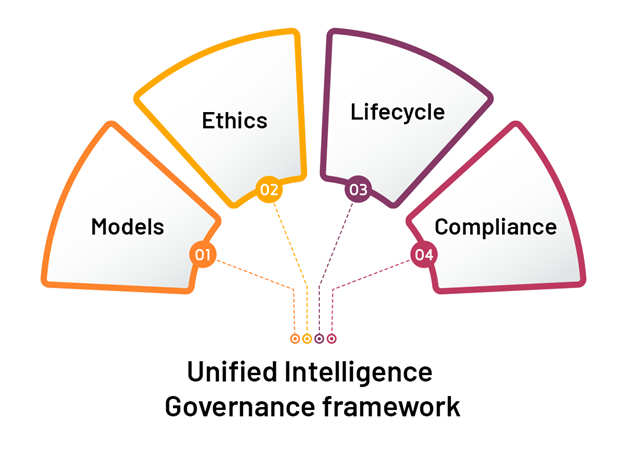

The Unified Intelligence Governance Framework

The Unified Intelligence Governance framework provides comprehensive guidance on managing and utilizing data and intelligence technologies responsibly within an organization. It outlines decision rights, accountabilities, principles, policies and procedures for the ethical and accountable use of data and intelligence models across their lifecycle.

This framework bridges concepts from traditional data governance focused on data, people, process and policies with modern AI governance requirements related to models, ethics, lifecycle and compliance. It shifts the orientation from just data to AI models, from people to ethics, from process to lifecycle, and from policies to accountability. This holistic approach enables integrated governance of both data and AI.

Key focus areas of the Unified Intelligence Governance framework include:

- Models: Governing the responsible and ethical development, deployment and monitoring of AI systems.

- Ethics: Ensuring AI systems align with organizational values and prevent unacceptable harm.

- Lifecycle: Managing data and models responsibly across the entire lifecycle from creation to retirement.

- Compliance: Embedding controls to enforce policies and external regulations related to data and AI.

By bridging traditional data governance and modern AI governance, this integrated approach provides the foundation for scaling AI safely and responsibly.

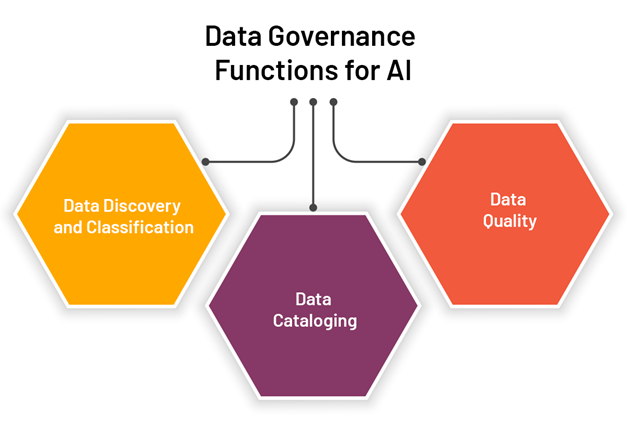

Examples of Evolving Data Governance Functions for AI

Integrating AI transforms how core data governance functions must be approached:

- Data Discovery and Classification: The need for comprehensive and automated data discovery and precise tagging becomes critical to find, understand and prepare data for AI training. Metadata capture for AI-generated data also becomes imperative.

- Data Cataloging: Beyond static documentation, active metadata that provides real-time context on upstream data changes impacting AI systems becomes vital. AI models and their logic also require extensive metadata capture to ensure traceability and transparency.

- Data Quality: Continuous validation through statistical analysis and monitoring for drift is crucial to ensure consistently high-quality data feeding AI systems. Tool-supported data observability is imperative.

These examples illustrate how fundamentals like discovery, cataloging and quality pivot significantly to enable effective AI governance, requiring integrated modernization.
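
Drift monitoring, mentioned under Data Quality above, can be sketched with the Population Stability Index (PSI), one common statistic for comparing a feature's current distribution against its training baseline. The choice of PSI, the bin count and the 0.2 alert threshold are assumptions for illustration (0.2 is a widely used rule of thumb, not a standard); other tests may suit a given dataset better.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample."""
    if not expected or not actual:
        raise ValueError("Both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training baseline vs. shifted production data.
baseline = list(range(100))
shifted = [v + 30 for v in baseline]

ALERT_THRESHOLD = 0.2  # assumption: tune per feature and policy

print("no drift:", psi(baseline, baseline))
score = psi(baseline, shifted)
print("shifted :", round(score, 2), "ALERT" if score > ALERT_THRESHOLD else "ok")
```

Running a check like this on a schedule for each model input, and recording the scores in the catalog, ties data quality monitoring back to the metadata and lineage practices described above.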

Realizing Responsible Data and AI Governance

AI holds tremendous potential as a transformative technology. But its sustained impact hinges on development and application in a responsible and ethical manner. This makes comprehensive data and AI governance central to unlocking AI’s full value.

By implementing integrated governance spanning training data, model development, and operational deployment, organizations can uphold transparency, accountability, and compliance. This paves the way for fair, balanced and socially beneficial AI.

Through continuous governance evolution centered on human oversight and cross-functional orchestration, the promise of AI can be realized. Purpose-driven governance provides the cornerstone for engendering stakeholder trust in AI systems.

The voyage to responsible and ethical AI requires sustained commitment from organizations across policy, process, people and technology dimensions. An investment like this will prove invaluable in securing AI’s role as an equitable digital accelerator for both business and society.

FAQs

What is data governance, and why is a framework important?

Data governance is the practice of managing your data’s quality, security, and usefulness. A framework provides a structured plan, outlining key areas like data quality, security, and access control for consistent management.

What are some data governance tools, and how do they support AI?

Tools for metadata management and data quality monitoring support Unified Intelligence Governance by automating tasks and improving visibility into data and AI practices.

Are there different data governance definitions, and how do they apply to AI?

Yes, definitions may vary slightly, but all focus on managing data effectively. For AI, the massive amount of training data requires a more comprehensive approach. Unified Intelligence Governance builds on existing principles by including AI-specific aspects like model oversight and lifecycle management.

How does the EU AI Act impact data governance?

The EU AI Act introduces a risk-based approach, requiring stricter governance for high-risk AI systems (e.g., critical infrastructure) compared to minimal-risk ones. Organizations should consider these requirements when building their unified data and AI governance model to ensure compliance.

What are the key differences between traditional data governance and Unified Intelligence Governance?

Traditional data governance focuses on data, people, processes, and policies. Unified Intelligence Governance expands on this by including models, ethics, lifecycle management, and compliance specific to AI. It shifts the focus from data to models, people to ethics, processes to lifecycle, and policies to accountability.

How can organizations implement data discovery and classification for AI?

Traditional methods might not suffice. Unified governance requires a more comprehensive and automated approach to data discovery and tagging. This helps identify relevant data for AI training and understand its context for proper preparation. Additionally, capturing metadata for AI-generated data becomes crucial.

How does data quality change in the context of AI governance?

Data quality is even more critical for AI. Unified governance necessitates continuous data validation using statistical analysis and drift monitoring to ensure consistently high-quality data feeding AI systems. Data observability tools can be valuable in this process.

What are some of the benefits of implementing Unified Data and AI Governance?

- Scale AI responsibly by mitigating risks.

- Uphold transparency and explainability in AI decisions.

- Ensure compliance with data protection regulations and the EU AI Act.

- Build trust in AI systems through ethical and accountable development.