Why this blog?

Data engineering lays the foundation for data science and analytics by integrating in-depth knowledge of data technology, reliable data governance and security, and a solid understanding of data processing. Data engineers manage data pipelines, i.e. the infrastructural designs for modern data analytics, to enable smooth data analysis operations.

With Amazon Web Services (AWS), data engineers can create data pipelines, manage data transfer and ensure efficient data storage.

Now, let us look at the AWS services used to build data engineering pipelines, frameworks and end-to-end workflow integrations:

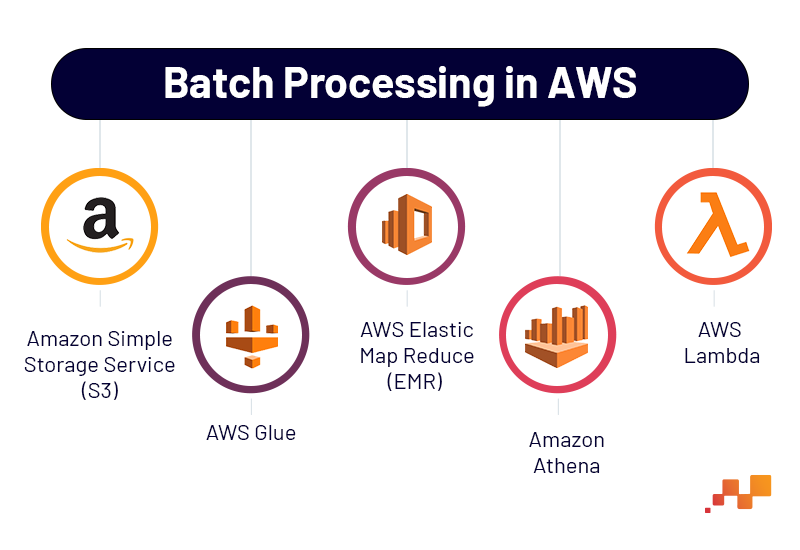

Batch Processing

Amazon Simple Storage Service (S3) is a data store that can store any amount of data from across the internet. As it is an incredibly scalable, fast and affordable option, data engineers have the flexibility to duplicate their S3 storage across different Availability Zones with Amazon S3.

AWS Glue is a fully managed ELT (Extract, Load and Transform) service to easily and cost-effectively process, enhance and migrate data between different data stores and data streams. Data engineers can interactively analyze and process the data using AWS Glue Interactive Sessions. Data engineers can visually develop, execute and monitor ETL workflows in AWS Glue Studio with a few clicks. Glue uses Spark and can support parallel processing of jobs and serverless processing.

AWS Elastic Map Reduce (EMR) is one of the primary AWS services for developing large-scale data processing that utilizes Big Data technologies such as Apache Hadoop, Apache Spark, Hive, etc. Data engineers can use EMR to launch a temporary cluster to run any Spark, Hive or Flink task. It allows engineers to define dependencies, establish a cluster configuration and identify the underlying EC2 instances.

Amazon Athena is an interactive query tool to easily assess data in Amazon S3 with SQL. Data engineers can use Athena to gain some insights from the data once the metadata has been added to the Data Catalog. When accessing GB of data in Parquet format with strong partitions, engineers typically get results within seconds.

AWS Lambda is an AWS service for serverless computing that runs your code in response to events and effortlessly manages the underlying computing resources. Lambda is helpful when you really need to gather raw data. Data engineers can develop a Lambda function to access an API endpoint, get the result, process the data and store it in S3 or DynamoDB

Evolving Data Stewardship Roles for Data and AI Governance

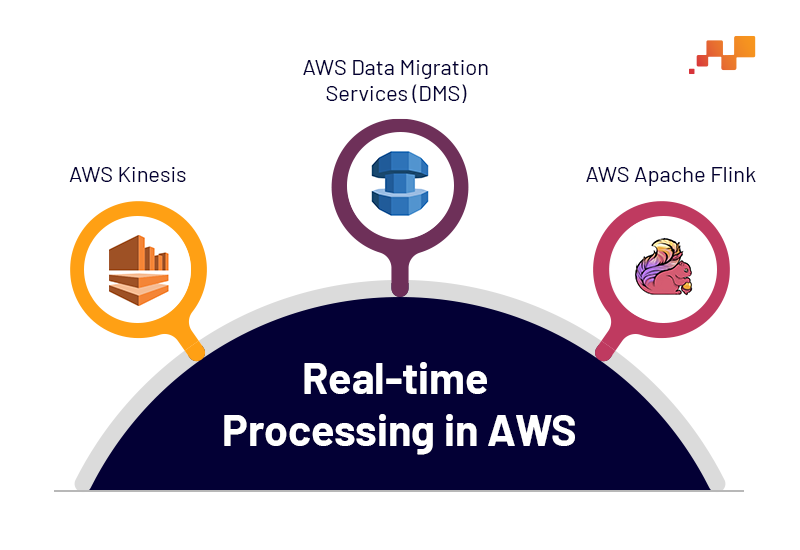

Real-time Processing

AWS Kinesis offers multiple managed cloud-based services to collect and analyze streaming data in real time. Data engineers use Amazon Kinesis to create new streams, easily specify requirements and start streaming data. In addition, Kinesis allows engineers to retrieve and analyze data immediately instead of waiting for a data output report.

AWS Data Migration Services (DMS) is a managed migration and replication service that helps move database and analytics workloads to AWS quickly, securely, and with minimal downtime and no data loss.

AWS Apache Flink is a streaming dataflow engine that can be used for real-time stream processing of high-throughput data sources. Flink supports event timing semantics for out-of-order events, exact once semantics, backpressure control, and APIs optimized for writing, streaming and batch applications. Amazon EMR supports Flink as a YARN application, so you can manage resources along with other applications within a cluster.

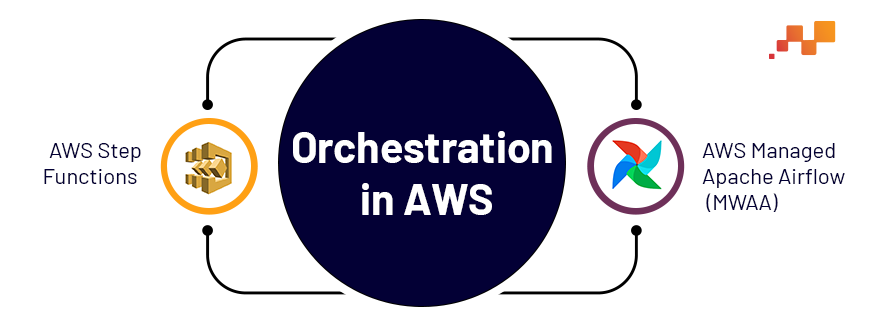

Orchestration

AWS Managed Apache Airflow (MWAA) orchestrates workflows using directed acyclic graphs (DAGs) written in Python. You provide MWAA with an Amazon Simple Storage Service (S3) bucket containing your DAGs, plugins and Python requests. You can then run and monitor your DAGs using the AWS Management Console, a command line interface (CLI), a software development kit (SDK), or the Apache Airflow user interface (UI).

AWS Step Functions is a visual workflow service that helps developers use AWS services to build distributed applications, automate processes, orchestrate microservices, and create pipelines for data and machine learning.

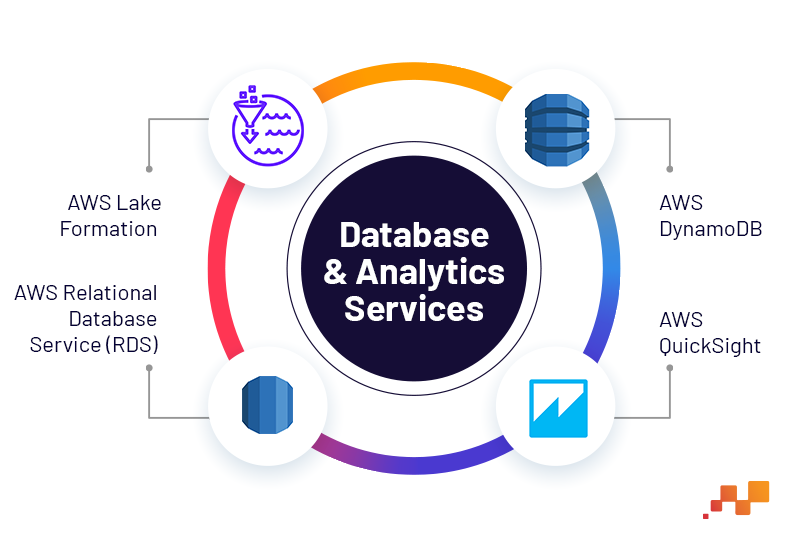

Database and Analytics

AWS Lake Formation centralizes the management of permissions for your data and facilitates sharing within your organization and externally. Lake Formation helps you move data into the Amazon Simple Storage Service (S3) data Lake, cleanse and classify data using machine learning algorithms, and secure access to your sensitive data with granular controls at the column, row, and cell level once it has been collected and cataloged.

AWS Relational Database Service (RDS) is a collection of managed services that simplify the setup, operation and scaling of databases in the cloud. It is a managed SQL database service provided by Amazon Web Services (AWS). Amazon RDS supports a range of database engines for storing and organizing data. It also helps with relational database management tasks such as data migration, backup, recovery and patching.

AWS DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. With Amazon DynamoDB, you can create a database table that can store and retrieve any amount of data and serve any level of requirement. DynamoDB supports three data types (numbers, strings and binary data), in both scalar and multi-valued sets. It supports document storage such as JSON, XML or HTML in these data types. Tables do not have a fixed schema, so each data element can have a different number of attributes.

Advanced Prompt Engineering with ChatGPT Frameworks

AWS QuickSight empowers data-driven organizations with unified business intelligence (BI) at scale. It allows you to create data visualizations and dashboards for better business decisions. Amazon QuickSight can collect data from a variety of sources, including Amazon Athena, Aurora, AWS Redshift and more.

Data Cataloging

Glue Crawlers are scheduled or on-demand jobs that can query any data store to extract schema information and store the metadata in the AWS Glue Data Catalog. Glue crawlers use classifiers to specify the data source to crawl.

Glue Catalog serves as a metadata store for AWS services and any services outside of AWS that are compatible with a Hive metastore. It tracks runtime metrics and stores the indexes, locations of data, schemas, etc. Basically, all ETL jobs that are executed in AWS Glue are tracked. All this metadata is stored in the form of tables, with each table representing a different data store.

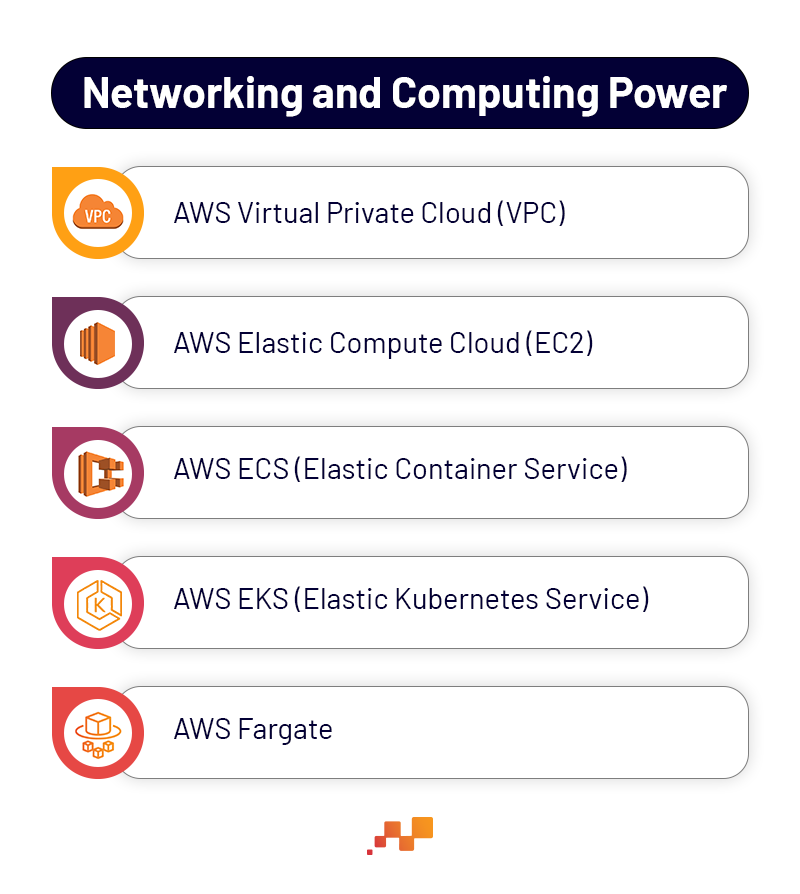

Networking and Computing Power of AWS

AWS Virtual Private Cloud (VPC) is a virtual network associated with your AWS account. It is logically isolated from other virtual networks in the AWS cloud. We can define an IP address range for VPC, add subnets and gateways and assign security groups. A subnet is a range of IP addresses in your VPC.

AWS Elastic Compute Cloud (EC2) is simply a virtual server in the terminology of Amazon Web Services. With an EC2 instance, AWS subscribers can request and provision a computer server within the AWS cloud.

AWS ECS (Elastic Container Service) is a fully managed container orchestration service that helps you deploy, manage and scale containerized applications more efficiently. It works seamlessly with AWS, offering a simple way to run containerized tasks in the cloud or on your own servers. Plus, it comes with advanced security features through Amazon ECS Anywhere.

AWS EKS (Elastic Kubernetes Service) is a managed Kubernetes service for running Kubernetes in the AWS cloud and on-premises data centers. Amazon EKS automatically manages the availability and scalability of Kubernetes control nodes, which are responsible for scheduling containers, managing application availability, storing cluster data, and other important tasks.

AWS Fargate is a computing engine for containers that work with both Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes (EKS). It is a serverless, paid computing engine that allows you to focus on building applications without having to manage servers.

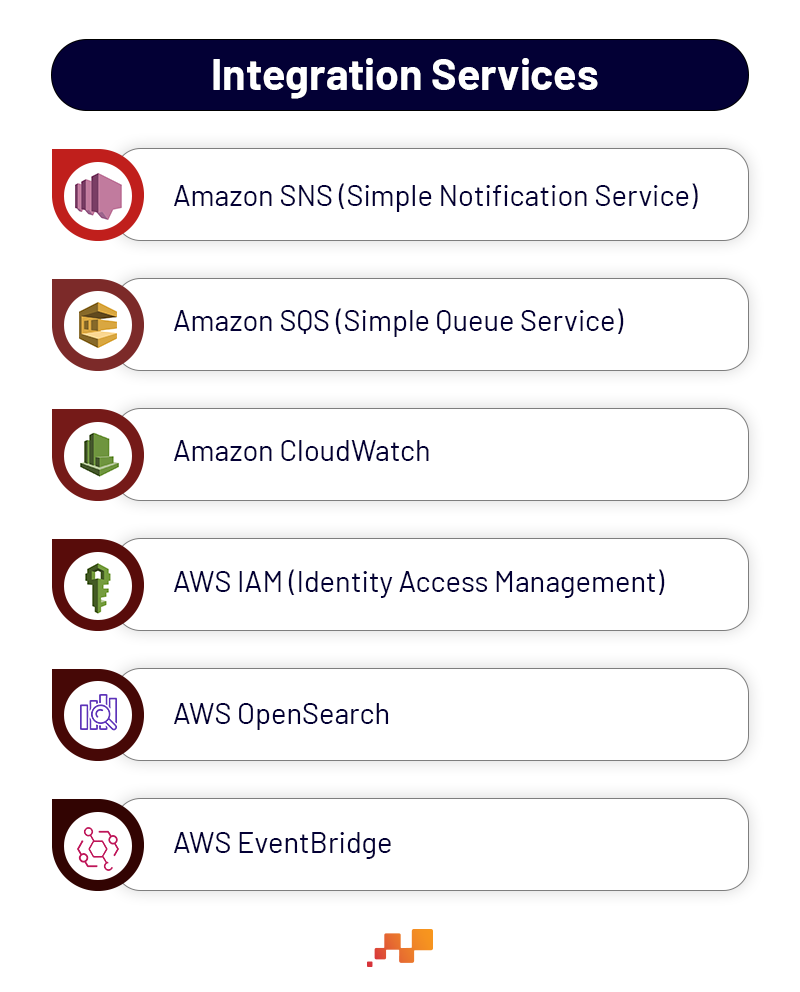

Integration Services (logging, monitoring, queues)

Amazon SNS (Simple Notification Service) is a web service that simplifies the setup, operation and sending of notifications from the cloud. It is a fully managed pub/sub service for A2A and A2P messaging.

Amazon SQS (Simple Queue Service) is a message queue service that is used by distributed applications to exchange messages via a polling model and can be used to decouple sending and receiving components.

Amazon CloudWatch is a service that monitors applications, responds to performance changes, optimizes resource usage, and provides insight into operational status. By collecting data across AWS resources, CloudWatch provides insight into system-wide performance and allows users to set alerts, automatically respond to changes, and get a unified view of operational health.

With AWS IAM (Identity Access Management), we can define who or what can access services and resources in AWS, centrally manage fine-grained permissions, and analyze access to refine permissions in AWS. It is a security and business discipline that involves multiple technologies and business processes to help the right people or machines access the right resources at the right time and for the right reasons, while preventing unauthorized access and fraud.

AWS OpenSearch makes it easy for you to perform interactive log analysis, real-time application monitoring, website search and much more. OpenSearch is an open source, distributed search and analytics suite derived from Elasticsearch.

AWS EventBridge provides simple and consistent ways to ingest, filter, transform and deploy events so you can quickly build applications. It is designed for high-performance, low-latency, high-throughput processing of real-time data. It enables organizations to quickly and easily integrate applications, services and data across multiple cloud environments. It is a fully managed service that provides a reliable, scalable and secure way to process and route events between applications and services. On the other hand, Kafka is designed for high availability and supports various features such as replication and fault tolerance to ensure the availability and durability of data.